In machine learning, matrix multiplication is one of the most critical operations. It is also computationally expensive: it relies heavily on multiply-add instructions, which makes it hard to run efficiently at large scale without GPUs or other accelerated hardware.

For this reason, many studies have focused on developing algorithms that efficiently approximate exact matrix multiplication.

Matrix multiplication is a crucial component of many machine learning applications, but it can be slow on CPUs. GPUs and TPUs multiply matrices faster than general-purpose processors because they execute many math operations in parallel, which makes them well suited to ML workloads.

However, this hardware can be expensive or simply unavailable, for example for researchers on tight budgets or on resource-constrained platforms such as IoT devices, which need low-cost solutions but still depend on fast computation.

The Outcome of the Research

Researchers at MIT’s Computer Science and Artificial Intelligence Lab (CSAIL) have developed a new algorithm for speeding up matrix multiplication in machine learning.

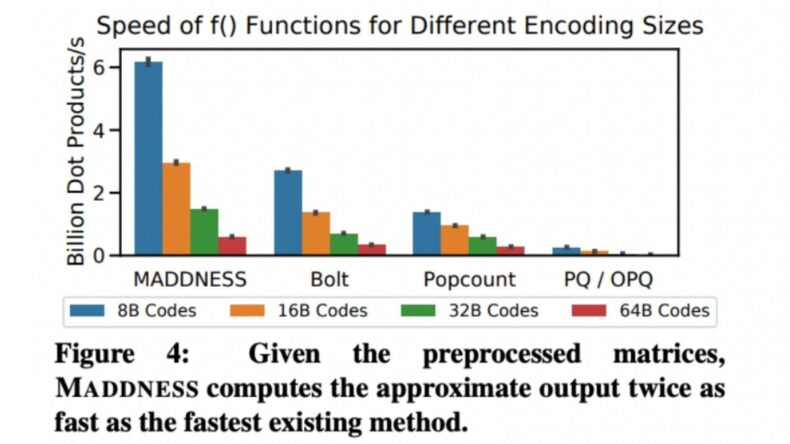

This method, called Multiply-ADDitioN-lESS (MADDNESS), requires zero multiply-add operations, yet it runs about 10x faster than other approximate matrix multiplication (AMM) methods and, according to the authors, about 100x faster than exact multiplication.

The Experiment

The team described MADDNESS and a set of experiments evaluating it. Unlike algorithms built on multiply-add operations, MADDNESS encodes its input with learned hash functions, and it does so on a single CPU thread at up to 100 GB/second on an Intel i7 processor core.

In their paper, the authors present a method for computing approximate dot products that is considerably more efficient than other approaches. It also comes with an upper bound on its error, and in some cases it stays both fast and accurate when compared against alternative algorithms.

What were the results?

In a set of experiments comparing MADDNESS with other algorithms in an image classifier, the researchers found that it offers a better speed-accuracy trade-off.

It achieves “virtually” the same accuracy as exact multiplication, which is the usual baseline, while running 10x faster. Gains like this matter most for classification or detection workloads at the scale of industry giants such as Google, where millions of live user videos may be processed on demand, around the clock.

The use

Machine learning researchers have lately turned their attention to GPUs and TPUs for matrix multiplication, since these chips perform the operation many times faster than CPUs.

However, these chips may not always be available or affordable, whether because of budget constraints or because of resource limits within an application such as IoT, which favours low-cost processors over high-precision computation.

As a result, academia is exploring alternatives for cases where exact results are not needed and speed counts most. Trading a little accuracy for speed could let these systems reach a wider range of devices without the costly hardware investment required until now.

The definition

The MADDNESS algorithm is a machine learning optimisation method that makes several assumptions about its input. For instance, “the matrices are tall and relatively dense,” which is common in image classifier models such as those built on deep neural networks, or in primal-dual algorithms.

In addition, one matrix contains fixed values while the other represents the input data, such as the pixels of an image.

Benefits

MADDNESS is the first technique to produce fast hashes that do not require multiply-add operations. Its hash function is based on binary regression trees with 16 leaves each, where every leaf acts as a hash bucket. An input vector is mapped straight to its prototype using only comparisons against threshold values at the split points of the tree's branches. This makes it much faster than other techniques.
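The comparison-only hashing described here can be illustrated with a small sketch. It is not the authors' implementation: the function name `hash_to_bucket`, the use of one split dimension per tree level, and the hand-picked thresholds are all assumptions made for illustration (in practice such parameters would be learned from training data). A balanced binary tree of depth 4 gives the 16 leaves mentioned in the text.

```python
from typing import Sequence

# Hypothetical setup: a balanced binary tree of depth 4 has 2**4 = 16 leaves,
# matching the 16 hash buckets per tree described in the article.
DEPTH = 4


def hash_to_bucket(x: Sequence[float],
                   split_dims: Sequence[int],
                   thresholds: Sequence[Sequence[float]]) -> int:
    """Map a vector to one of 16 buckets using comparisons only.

    split_dims[level] is the input dimension inspected at that tree level
    (an assumption of this sketch). thresholds[level][node] is the cut-off
    value for a given node at that level.
    """
    node = 0  # index of the current node within its level
    for level in range(DEPTH):
        value = x[split_dims[level]]
        go_right = value > thresholds[level][node]
        # Children of node i at the next level are 2*i (left) and 2*i + 1 (right).
        node = 2 * node + (1 if go_right else 0)
    return node  # bucket index in range(16)


# Toy, hand-picked parameters (not learned): level L has 2**L nodes,
# and every threshold is 0.0.
split_dims = [0, 1, 2, 3]
thresholds = [[0.0] * (2 ** level) for level in range(DEPTH)]

bucket = hash_to_bucket([0.5, -1.0, 2.0, -0.3], split_dims, thresholds)
print(bucket)  # path right, left, right, left -> binary 1010
```

Each lookup costs four comparisons and no multiplications, which is the property the article attributes to the MADDNESS hash.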

MADDNESS also uses an additional step to reduce the reconstruction error of a matrix. It does so by choosing prototypes that minimise that error, and then aggregating 8-bit values with hardware-specific instructions, rather than ordinary additions or multiplies, when combining multiple partial products into one result vector.
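To make the role of prototypes concrete, here is a minimal sketch of the general lookup-table idea behind prototype-based approximate dot products. It is a simplified illustration under stated assumptions, not the MADDNESS implementation: the names `BLOCK`, `encode`, `build_tables`, and `approx_dot` are hypothetical, the prototypes are hand-picked rather than optimised, the encoder uses a nearest-prototype search (which does multiply) in place of comparison-only hashing, and the 8-bit aggregation step is left out.

```python
from typing import List

Vector = List[float]

BLOCK = 2  # hypothetical block width: each codebook covers 2 input dimensions


def split_blocks(v: Vector) -> List[Vector]:
    """Cut a vector into consecutive blocks of BLOCK entries."""
    return [v[i:i + BLOCK] for i in range(0, len(v), BLOCK)]


def encode(x_block: Vector, prototypes: List[Vector]) -> int:
    """Stand-in encoder: index of the nearest prototype (squared distance).

    This uses arithmetic for brevity; a comparison-only hash would go here.
    """
    dists = [sum((a - p) ** 2 for a, p in zip(x_block, proto))
             for proto in prototypes]
    return dists.index(min(dists))


def build_tables(codebooks: List[List[Vector]], b: Vector) -> List[List[float]]:
    """Offline step: precompute dot(prototype, b_block) for the fixed vector b."""
    tables = []
    for protos, b_block in zip(codebooks, split_blocks(b)):
        tables.append([sum(p * w for p, w in zip(proto, b_block))
                       for proto in protos])
    return tables


def approx_dot(a: Vector, codebooks: List[List[Vector]],
               tables: List[List[float]]) -> float:
    """Online step: one table lookup per block, then a sum of the lookups."""
    total = 0.0
    for protos, table, a_block in zip(codebooks, tables, split_blocks(a)):
        total += table[encode(a_block, protos)]
    return total


# Toy data: two codebooks with two hand-picked prototypes each.
codebooks = [
    [[0.0, 0.0], [1.0, 1.0]],
    [[0.0, 1.0], [1.0, 0.0]],
]
b = [2.0, 3.0, 4.0, 5.0]             # column of the fixed matrix
tables = build_tables(codebooks, b)  # computed once, ahead of time

a = [0.9, 1.1, 0.1, 0.8]             # row of the data matrix
approx = approx_dot(a, codebooks, tables)
exact = sum(x * w for x, w in zip(a, b))
print(approx, exact)
```

Because one matrix is fixed, all multiplications against it can be moved into `build_tables`. The quality of the approximation depends on how well the prototypes represent the data, which is why reducing reconstruction error matters.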

The AMM algorithms in the comparison are all broadly similar, but MADDNESS outperformed them.

The researchers compared MADDNESS with six other popular approximate algorithms on an image classifier trained on the CIFAR datasets, and found that only MADDNESS delivered improved results.