Automation is everywhere, but it has a unique effect when it comes to written content. Automated text is no longer an experiment; it’s actively shaping what people read every day.

Some love the efficiency it brings; others worry about its impact on accuracy and the trustworthiness of information.

Is the news you consume written by a machine? If it is, how does that change what you believe and trust? More importantly, how can you recognize automated writing when you see it?

Here are the key points to keep in mind before exploring the issue in depth:

- Automated content production is on the rise, especially in news.

- There are real concerns about accuracy and bias.

- Spotting automated text can be difficult, but there are methods.

- AI-generated writing lacks a human touch and emotional insight.

- Tools exist to check whether text was generated by AI.

The Rise of Automated Text in News

Newsrooms across the world use automation for content creation. Major outlets rely on algorithms to produce financial reports, sports results, and even basic articles. The benefits are clear: faster production and the ability to process massive amounts of information instantly.

However, automated articles often lack depth, context, and insight. The tone can feel generic, and the content sometimes misses subtle nuances. In a world where many people seek detailed and thoughtful analysis, relying solely on automated text can leave gaps in understanding.

The core issue comes down to quality. Automated systems excel at producing content that informs, but not necessarily in a way that resonates on a personal level. Machines process data and spit out a product, but they don’t truly “think” about what they’re writing.

Accuracy and Bias in Automated Text

One of the major concerns with automated writing in news is accuracy. An algorithm can make mistakes, especially when interpreting large datasets. In some cases, automation might pull incorrect data or misinterpret statistics. News based on flawed data leads to false conclusions. If people don’t notice these errors, the spread of misinformation becomes a significant problem.

Bias is another risk. Although machines don’t hold opinions, they learn from the data fed to them. If that data contains bias, the text will reflect it. Human oversight is needed to ensure that output remains neutral. Without that oversight, the potential for skewed or incomplete reporting grows.

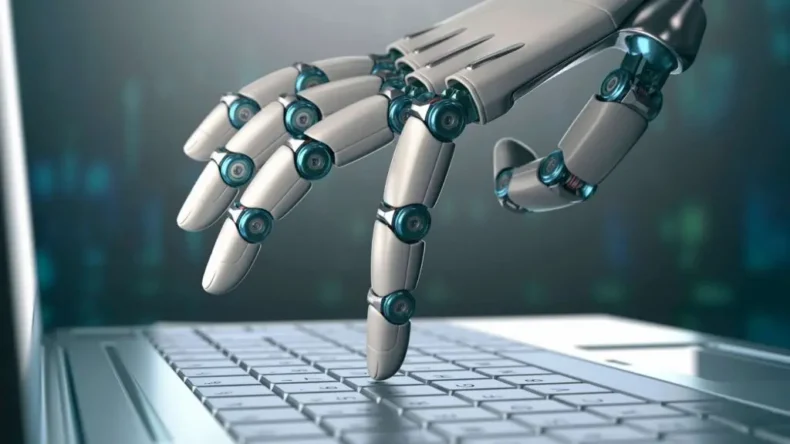

How to Spot Automated Text in News

Detecting automated text can be difficult, especially since the technology is designed to produce content that looks human-made. Still, several signs can help readers identify whether they’re looking at something a machine wrote.

Repetition is often a giveaway. Automated text tends to follow predictable patterns. Sentences may feel too structured or repeat the same phrases. Readers should also look out for articles that lack depth. If the content feels like it’s only touching the surface or missing critical analysis, automation may be at work.
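To make the repetition check concrete, here is a minimal Python sketch that counts word sequences appearing more than once in a passage. The function names (`repeated_phrases`, `repetition_ratio`) and the choice of three-word phrases are assumptions made for illustration; this is not how any particular AI checker works, and a high score only suggests that a closer read is worthwhile.

```python
import re
from collections import Counter


def _words(text):
    # Lowercase the text and keep alphabetic words (straight or curly apostrophes allowed).
    return re.findall(r"[a-z'’]+", text.lower())


def repeated_phrases(text, n=3, min_count=2):
    """Return the n-word phrases that occur at least min_count times."""
    words = _words(text)
    # Slide a window of n words across the text to build every phrase.
    ngrams = zip(*(words[i:] for i in range(n)))
    counts = Counter(" ".join(gram) for gram in ngrams)
    return {phrase: c for phrase, c in counts.items() if c >= min_count}


def repetition_ratio(text, n=3):
    """Share of n-word phrases in the text that belong to a repeated phrase."""
    total = max(len(_words(text)) - n + 1, 0)
    if total == 0:
        return 0.0
    return sum(repeated_phrases(text, n).values()) / total


sample = "The team won the game. The team won the match. Fans cheered."
print(repeated_phrases(sample))
print(repetition_ratio(sample))
```

Human writers repeat themselves too, especially in short reports built from templates, so a ratio like this is best treated as one signal among several rather than a verdict.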

Another way to spot machine-generated text is to use an AI checker like ZeroGPT. These tools analyze content and flag patterns that are common in automated text. They are not foolproof, but they can offer some insight into whether an article was created by a machine.

Missing the Human Touch

Machines can compile and regurgitate data, but they lack a human element. News often involves complex stories that require emotional understanding or human insight. Automated systems can’t feel empathy, make value judgments, or connect with a reader on a personal level.

Human writers bring nuance, subtlety, and emotional depth to their work. A human touch is necessary for a comprehensive understanding of complex issues. In automated text, you may notice a lack of personality or voice.

There’s a difference between a human writing with experience and a machine simply arranging words. The absence of this personal connection is often a clear indicator of automated content.

Consequences of Relying on Automated Text

Relying too much on automated writing for news could have long-term effects on how information is consumed and trusted. With less human involvement, there’s the possibility of losing nuance and depth in reporting. A machine-generated article may present the facts, but without context, those facts lose meaning.

For example, consider an automated article on a natural disaster. It may include statistics on casualties, the extent of the damage, and predictions on recovery.

But it won’t capture the emotional toll, the fear, the community impact, or the resilience of those affected. Machines can’t interpret the emotional weight behind data. People reading automated content may find themselves less informed about the real-life implications of major events.

Over time, heavy reliance on automated writing could also reduce diversity in content. Since machines rely on predefined patterns and data sets, there’s a risk of homogenizing information. Less variety means fewer perspectives, which limits readers’ understanding of complex global issues.

The Importance of Media Literacy

As automation continues to influence writing in news, it becomes essential for readers to stay informed and critical. People should question the source of what they read and think about how it was created. It’s also important to recognize the role of automation in shaping content and how that might influence its reliability.

Informed readers can recognize the limitations of automated content and actively seek human-generated stories for a more comprehensive view.

Media literacy involves recognizing potential biases and understanding that not all content is created equal. Staying aware of how content is produced will help people avoid the pitfalls of automated news and make more informed decisions.

Human Oversight and the Future of News

Automation isn’t going anywhere. It’s becoming more common in industries that require fast, efficient output, and news is no exception. But human oversight remains necessary. Machines can’t capture every nuance, and they certainly can’t replace human judgment.

There is value in letting machines handle certain tasks, such as summarizing financial reports or generating basic news articles. But for complex reporting, human insight is irreplaceable.

The future of news may involve a balance: machines handling the more straightforward tasks, while humans focus on analysis, perspective, and the stories that require emotional depth.

This balance could maintain speed and efficiency while ensuring accuracy and preserving the human element. The challenge lies in making sure automation doesn’t dominate the process entirely.

The role of human editors and writers will remain vital to producing content that informs, engages, and connects with people on a deeper level.

Conclusion

Automated text has its place in the fast-paced world of news, but its limitations are clear. Machines produce efficient content, but they lack the depth, insight, and personal connection that only human writers can offer. Spotting automated text requires vigilance.

Recognizing patterns, using AI checkers, and staying critical of surface-level writing can help readers navigate a landscape increasingly influenced by automation.

The future of news should strike a balance. Automation can improve efficiency, but it must be complemented by human oversight to preserve accuracy, emotion, and connection. As technology continues to evolve, media literacy becomes more important. Readers need to stay informed, question the sources of content, and seek human-made stories for the fuller picture.

Maintaining that balance ensures that the information people consume stays accurate, trustworthy, and human.