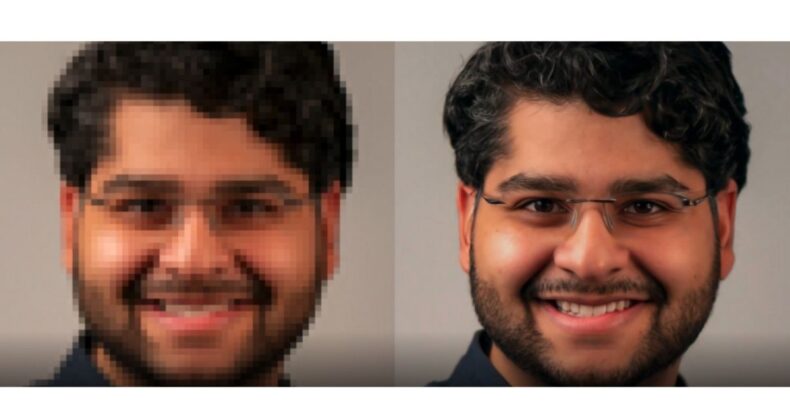

Admit it or not, we have all wished for a practical way to turn a nostalgic, low-resolution photo, taken years ago with an inferior camera lens, into a sharper, higher-resolution image with distinct features.

Well, this may no longer be wishful thinking: researchers on Google Research's Brain Team have unveiled two new models for enhancing the quality of photographs and other images.

The two models, both of which are "diffusion models", are SR3 (Super-Resolution via Repeated Refinement) and CDM (Cascaded Diffusion Models).

They use AI (artificial intelligence) to generate high-fidelity pictures, significantly improving image quality.

Although diffusion models were first proposed in 2015, they have seen a revival after a spell of dormancy, thanks to better training stability and encouraging results on image and audio generation.

How Do They Work?

SR3

SR3 is an image super-resolution model. It takes a low-resolution image as input and builds the corresponding high-resolution image starting from pure noise.

During training, a high-resolution image is progressively "corrupted" by adding noise to it until only "pure noise" remains.

The model then learns to reverse this process: starting from pure noise, it removes the noise step by step to reach a target distribution, guided by the low-resolution input image.
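The "corruption" step can be sketched in a few lines. This is a minimal illustration that assumes the common Gaussian diffusion formulation, in which a clean value is blended with random noise according to a signal level; the names `forward_noise` and `alpha_bar` are illustrative and not taken from the SR3 work, and the learned denoising network is not shown.

```python
import math
import random


def forward_noise(pixels, alpha_bar, rng):
    """Blend clean pixel values with Gaussian noise.

    alpha_bar is the fraction of signal kept (an assumed parameter name):
    1.0 returns the clean image, 0.0 returns pure noise.
    """
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    return [signal_scale * x + noise_scale * rng.gauss(0.0, 1.0) for x in pixels]


rng = random.Random(0)
image = [0.2, 0.5, 0.9, 0.4]  # toy "image", flattened to a list of pixel values

# Lower alpha_bar means more corruption; at 0.0 nothing of the image is left.
for alpha_bar in (1.0, 0.5, 0.0):
    print(alpha_bar, forward_noise(image, alpha_bar, rng))
```

A trained model would run this in the opposite direction, estimating and removing the noise one step at a time.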

SR3 has shown strong performance when upscaling a low-res image to high-res by a factor of roughly 4x to 8x. Another advantage is that SR3 models can be cascaded, that is, chained one after another, to increase the overall scaling factor.

For instance, we can go from 64×64 to 1024×1024 by chaining a 64×64 → 256×256 model with a 256×256 → 1024×1024 model.
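The arithmetic behind this chaining is simple: the scaling factors of the stages multiply. A small sketch, using a hypothetical helper named `cascade_resolution`, makes that explicit:

```python
def cascade_resolution(start, factors):
    """Return the image side length after each stage of a cascade."""
    sizes = [start]
    for factor in factors:
        sizes.append(sizes[-1] * factor)
    return sizes


# Two 4x stages give an overall 16x increase in side length.
print(cascade_resolution(64, [4, 4]))  # [64, 256, 1024]
```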

SR3 vs. Other Methods

SR3 was compared with other existing algorithms in a Two-Alternative Forced Choice (2AFC) experiment.

Human subjects were shown pairs of images, one produced by an algorithm and one reference image, and asked to pick the one they believed had been taken with a camera.

The confusion rate then measures the percentage of the time raters preferred the model output over the reference image. A perfect algorithm would reach a 50% confusion rate, since raters could no longer tell the two apart.

The results favored SR3 over the methods it was compared against.
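The confusion rate described above is easy to compute once the raters' choices are recorded. The sketch below uses made-up choices purely for illustration; `confusion_rate` is a hypothetical helper, not code from the study.

```python
def confusion_rate(picked_model_output):
    """Percentage of trials in which the rater chose the model output.

    picked_model_output: list of booleans, one per trial, True when the
    rater picked the generated image over the reference image.
    """
    if not picked_model_output:
        raise ValueError("at least one trial is required")
    return 100.0 * sum(picked_model_output) / len(picked_model_output)


# Illustrative data only: the rater was fooled in 2 of 5 trials.
trials = [True, False, False, True, False]
print(confusion_rate(trials))  # 40.0
```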

CDM (Class-Conditional ImageNet Generation)

CDM is a class-conditional diffusion model trained on ImageNet data to generate high-res natural images. ImageNet is a "difficult, high-entropy" dataset, meaning that its images are highly varied.

For that reason, CDM is built as a cascade of multiple diffusion models.

This cascading pipeline proved very effective. It chains a diffusion model that generates a low-res image with a series of SR3 super-resolution models that upscale it to progressively higher resolutions.

It builds on a finding from earlier work on autoregressive models and VQ-VAE-2: cascading can improve both quality and training speed.
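The shape of such a cascade can be sketched with stand-ins for the real networks. Everything here is a placeholder: `base_model` returns random values instead of running a diffusion model, `upsample_stage` uses nearest-neighbour upsampling instead of SR3, and the sizes are toy values chosen to keep the example small.

```python
import random


def base_model(class_label, size, rng):
    """Stand-in for the low-res class-conditional model (random pixels)."""
    return [[rng.random() for _ in range(size)] for _ in range(size)]


def upsample_stage(image, factor):
    """Stand-in for one super-resolution stage (nearest-neighbour upsampling)."""
    out = []
    for row in image:
        wide = [value for value in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def run_cascade(class_label, base_size, factors, rng):
    """Generate a low-res image, then pass it through each stage in turn."""
    image = base_model(class_label, base_size, rng)
    for factor in factors:
        image = upsample_stage(image, factor)
    return image


sample = run_cascade("goldfish", 8, [2, 4], random.Random(0))
print(len(sample), len(sample[0]))  # 64 64
```

The point of the structure is that each stage only has to solve a smaller problem: the first model decides what the image contains, and later stages only add detail.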

Conditioning augmentation, a new data augmentation technique, further improved the sample quality of CDM. It addresses a problem that arises when working with the super-resolution models.

During training, these models are conditioned on original images from the dataset, but at test time they are given low-res images produced by a diffusion model, which may not be of the same quality.

This introduces a train-test mismatch, which conditioning augmentation addresses by augmenting the low-res input image of each super-resolution model in the cascading pipeline.

These augmentations include Gaussian blur and Gaussian noise, which keep each super-resolution model from overfitting to its lower-res input.
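To make the two augmentations concrete, here is a minimal sketch that blurs and then adds noise to a single row of pixel values. The function names, the kernel radius, and the parameter values are assumptions for illustration; the actual CDM settings are not given in this article.

```python
import math
import random


def gaussian_kernel(sigma, radius):
    """Normalised 1-D Gaussian weights covering [-radius, radius]."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_row(row, sigma, radius=2):
    """Gaussian-blur a row of pixels, clamping indices at the edges."""
    kernel = gaussian_kernel(sigma, radius)
    last = len(row) - 1
    blurred = []
    for i in range(len(row)):
        acc = 0.0
        for k, weight in enumerate(kernel):
            j = min(max(i + k - radius, 0), last)
            acc += weight * row[j]
        blurred.append(acc)
    return blurred


def augment_conditioning(row, sigma, noise_std, rng):
    """Apply blur, then additive Gaussian noise, to a low-res input row."""
    return [v + rng.gauss(0.0, noise_std) for v in blur_row(row, sigma)]


low_res_row = [0.0, 0.0, 1.0, 0.0, 0.0]
print(augment_conditioning(low_res_row, sigma=1.0, noise_std=0.05,
                           rng=random.Random(0)))
```

Training on inputs degraded this way exposes the super-resolution model to imperfect low-res images, which is closer to what it receives from the preceding stage at test time.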

CDM (Class-Conditional ImageNet Generation) vs. Other Methods

CDM outperforms models such as BigGAN-deep and VQ-VAE-2 on two metrics: Fréchet inception distance (FID), which assesses the quality of generated images, and Classification Accuracy Score.

It produces these high-fidelity images as a pure generative model, without relying on a classifier to boost sample quality, unlike some competing approaches.
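For intuition about FID: it is a Fréchet distance between two Gaussian distributions, one fitted to features of real images and one to features of generated images, with lower values meaning the two sets are more alike. The sketch below is a deliberately simplified one-dimensional version on made-up numbers; the real metric uses multi-dimensional features from an Inception network and full covariance matrices, and `frechet_distance_1d` is a hypothetical helper.

```python
import statistics


def frechet_distance_1d(real, generated):
    """Squared Fréchet distance between two 1-D Gaussians fitted to the data.

    For one dimension this reduces to
    (mean difference)^2 + (standard deviation difference)^2.
    """
    mu_r, mu_g = statistics.fmean(real), statistics.fmean(generated)
    sd_r, sd_g = statistics.pstdev(real), statistics.pstdev(generated)
    return (mu_r - mu_g) ** 2 + (sd_r - sd_g) ** 2


real_features = [0.0, 1.0, 2.0]       # illustrative values only
generated_features = [1.0, 2.0, 3.0]  # same spread, mean shifted by 1
print(frechet_distance_1d(real_features, generated_features))
```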

Both SR3 and CDM have surpassed the benchmarks set by their predecessors and could usher in a new wave of innovation in image and audio processing.